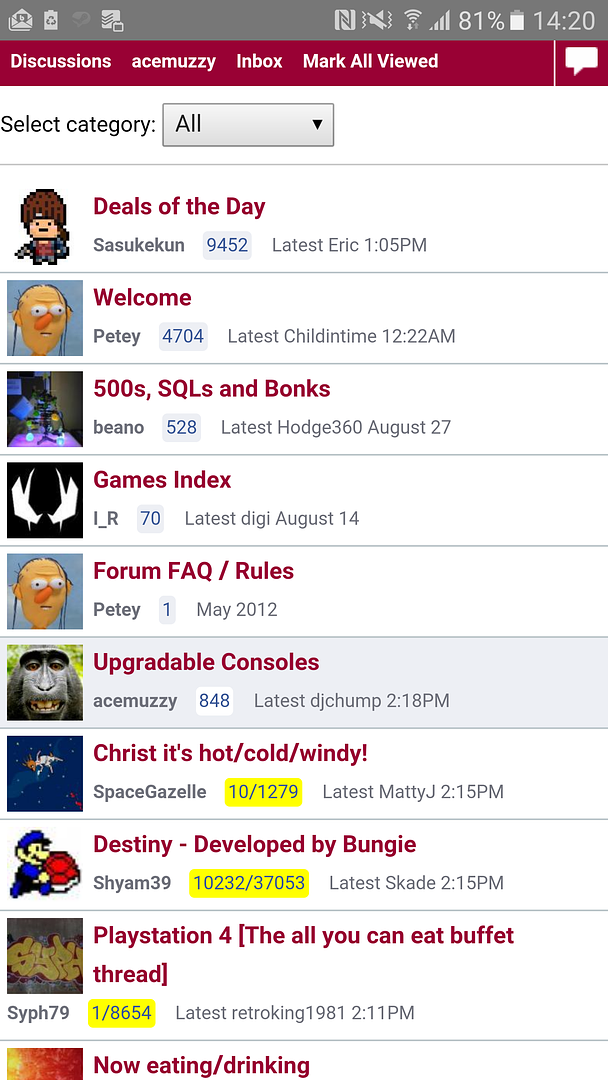

Upgradable Consoles

-

Right, seriously guys

How the hell does HDR mean an extra yellow pixel?

I'm getting the feeling people are talking shit. Surely HDR means High Dynamic Range, and the best way to achieve that is to have deep, glorious, dark, inky, quasar producing blacks

That has FA to do with your console really

I can see how a yellow pixel element could help to make colours less harsh on the eye, and help with the old circadian rhythms, blue light bad and all that, but still, it sounds like some utter bullshit somewhere

"I spent years thinking Yorke was legit Downs-ish disabled and could only achieve lucidity through song" - Mr B -

I just thought he meant the yellow bit on the forum has looked extra nice and it's being attributed to HDR.

Interestingly my printer has 5 colours (I think an extra cyan and magenta) so extra colour pixels might help. -

http://support.xbox.com/en-IE/xbox-one/console/hdr-on-xbox-one-s

Seems a yes to the slim having HDR anyway. I think I read somewhere that HDR is a software rather than hardware issue, so maybe both consoles can do it but the Scorpios and Pros will do it better?

edit: you still need a screen that can show it, which backs up Cockbeard's point I think

As an aside on Sony's motivations, I think that yes, it's a good way to sell new tech and keep people interested, but given that the tech isn't the money maker I think it's overall more about keeping people's gaming dollars focused on the PlayStation brand and games. There's no problem having an Xbox One or PC and playing the odd game that isn't on PS4, but why buy the multi-format game for Xbox One when you can have the best shiny version on PS4 Pro (pretty much why many people went for PS4 in the first place, if I remember correctly)

The PC market must be taking some money from them though. PCs are getting easier to use (most parts simply plug in now), and Windows and Steam on a single machine gives you a great system. Emulation gives you the classic stuff; even if it is illegal many will use it. And the W10/Xbox One link-up broadens the base for a developer to sell into. I'm not saying they've picked the best option but I can see why they are doing it.

I wouldn't be surprised if the recent announcement of PS Live on PC (or whatever it's called) is part of their effort to try and keep a handle on the PC world.

SFV - reddave360 -

Y'all realise muzzy was messing about, right?

-

@Cocko:

http://www.trustedreviews.com/opinions/hdr-tv-high-dynamic-television-explained

tl;dr it's not just about the blackness of the black. -

djchump wrote: so racist.

There's a Black Pixels Matter joke waiting to be made but I'm not brave enough to check my white privilege to do it. -

djchump wrote: Y'all realise muzzy was messing about, right?

Wrong.

mistercrayon wrote: I just thought he meant the yellow bit on the forum has looked extra nice and it's being attributed to HDR.

This. The yellow bits here are definitely brighter than bright on my S6. Though googling now suggests it's only a camera thing so am confused.

-

Pfft, doesn't look HDR to me.

-

You mean you've got an S7 (that somehow hasn't exploded yet?) and the screen is brighter than your old S6?

Could that not be, you know, the screen being brighter rather than HDR?

Oh wait, right, they've increased the gamut for the Note 7: http://www.pocket-lint.com/news/138387-what-is-mobile-hdr-why-samsung-s-note-7-screen-is-a-window-into-high-dynamic-range-s-future

Cool. But it not matching the 10 bit TV HDR kinda reinforces that "HDR" as a term doesn't seem to be standardised right now. -

AJ wrote: It's because it's an OLED screen.

LOL -

Oh right. I was confused because on desktop, that's maroon. And actually says 1 new.

-

Desktop Wanker!

-

Measured in nits, this is already fucking ridiculous marketing bullshit. HDR and Ultra HD Premium are both utter bollocks

You can already measure brightness in lumens, and then the contrast ratio is all that matters. My plasma was around 100000:1 and that's plenty for anyone -

I thought you'd understand what HDR is. How can you say it's utter bollocks?

-

Of course I understand what the words mean and how they relate to sound recording, image/video capture and image/video display

What I mean is that this is all marketing guff with no real stuff inside. I wonder what contrast ratio the spec of Ultra HD Premium defines, or how many "nits". Who the hell made that up? It's hardly a standard measurement of light output, is it? We have candelas and lumens, so why pull some random word out of their arse? That's why I refuse to trust it

What would you think if Ford launched a new car and said it did 7000 miles to the gloople (not a typo)

It's just bollocktalk -

They're SI, not marketing guff bollocktalk, so as such, very much are the standard measurements.

nit is colloquial for the SI unit for luminance = cd.m^-2 (candela per square metre).

lux is the SI unit for illuminance (i.e. luminous flux density/luminous exitance) = lumen.m^-2 (lumen per square metre).

Poynton p.604 and 607 for the defs. -
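To tie the units back to the contrast ratio talk: a rough sketch of how a contrast ratio falls out of two luminance readings in nits, and how that ratio converts to photographic stops. The function names and the 500 / 0.005 nit panel figures are made up for illustration; only the 100000:1 figure comes from earlier in the thread.

```python
import math

def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Ratio of brightest to darkest luminance, both in cd/m^2 (nits)."""
    return peak_nits / black_nits

def stops(ratio: float) -> float:
    """Dynamic range in stops: each stop is a doubling of luminance."""
    return math.log2(ratio)

# Hypothetical panel: 500 nits peak white, 0.005 nits black level.
ratio = contrast_ratio(500, 0.005)
print(f"{ratio:,.0f}:1 is about {stops(ratio):.1f} stops")
```

The point being that nits are an absolute measure of light coming off the screen, while contrast ratio is unitless, so a spec needs both to say anything useful.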

From the sounds of it, the way they're talking about it may well be bollocks. It's got definite value, though.

For example, an outdoor scene in SDR might have a sky where you can't make out much detail because there are only a small number of tones between its lowest and highest brightness. With HDR you'll be able to make out a lot more detail because the range of colours it's able to display is wider. It's a bit like 8 bit vs 16 bit graphics. -

Eeeh, well, from what I've seen it's all kinda up in the air - especially if an AMOLED screen is being called "HDR" simply because of the wider colour gamut. If the Android OS on it is still only rendering out at the standard 8bit per colour channel, then IMHO it's pretty misleading to use the term "High Dynamic Range", as the rendering backend is still the same range before it hits the screen. Only if the backend is rendering out at greater than 8bit per channel should it be called HDR IMHO.

-

And in the case of these consoles, that's exactly what's happening, AFAIK. I don't think we should be down on them because marketing in general is fucked.

-

Oh yeah, I'm not being down on the consoles - game rendering is the easiest thing to make HDR as most modern game backbuffers/G-buffers are already HDR and then get tonemapped down to 8bit per channel colour before present to screen.

It's the proliferation of screens and tech being touted as "HDR" this and that which is a bit of a wild west in my eyes at the moment - like the old "HD Ready" v "Full HD" etc. - until it settles out to a de facto format winner some time down the line. -
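For anyone wondering what "tonemapped down to 8bit" looks like in practice, here's a minimal sketch. It assumes the simple Reinhard operator and a plain 2.2 gamma, which are common textbook choices and not what any particular engine or console actually uses; the pixel values are made up.

```python
def reinhard(x: float) -> float:
    """Compress an unbounded linear HDR value into the range [0, 1)."""
    return x / (1.0 + x)

def to_8bit(x: float, gamma: float = 2.2) -> int:
    """Tonemap, gamma-encode and quantise one linear HDR channel value."""
    mapped = reinhard(max(x, 0.0))        # clamp negatives, then compress
    encoded = mapped ** (1.0 / gamma)     # simple gamma curve (assumption)
    return min(255, round(encoded * 255)) # quantise to an 8-bit code

# Made-up linear RGB values; 16.0 is far brighter than SDR "white" at 1.0.
hdr_pixel = (0.02, 1.0, 16.0)
print(tuple(to_8bit(c) for c in hdr_pixel))
```

An HDR output path would skip or soften that final squeeze and hand the display more than 8 bits per channel instead.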

Makes sense. I don't pay much attention to it all, so have no idea what's being sold as what.

-

HDR isn't just about the screen it is about the render output. If the output has a shit range of colour it doesn't matter how good the TV is it will be hamstrung by it.

The TV may be able to display pure black as pure black, but the range is more about a smooth gradient of colour. If you take an image with a high contrast, say a torch in a cave, there will be a glow around the torch that should look like a smooth gradient. If you then begin to fiddle with the contrast of that image, the glow will begin to look stepped in colour. A higher colour range increases the number of steps as there are more colours available. It is kind of like anti-aliasing for colour in a way.

A TV that supports HDR will be able to process the higher bitrate that is required to increase the range, in this case there will be roughly 25% more data being piped through.

That being said 10bit doesn't sound much of an improvement to me. I save out all renders I do at at least 16bit to maintain enough data to properly tweak contrasts in post-production. -
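A quick way to see the stepping effect described above: quantise the darkest 5% of a linear ramp at different bit depths and count how many distinct codes survive. This is only a sketch; it ignores gamma/transfer curves, and the function names and the 5% cut-off are assumptions picked for illustration.

```python
def quantise(value: float, bits: int) -> int:
    """Map a linear value in [0, 1] to the nearest integer code at this bit depth."""
    max_code = (1 << bits) - 1
    return round(value * max_code)

def distinct_steps(lo: float, hi: float, bits: int, samples: int = 10_000) -> int:
    """Count the distinct codes a smooth ramp from lo to hi lands on."""
    codes = {
        quantise(lo + (hi - lo) * i / (samples - 1), bits)
        for i in range(samples)
    }
    return len(codes)

for bits in (8, 10, 16):
    print(f"{bits}-bit: {distinct_steps(0.0, 0.05, bits)} steps in the darkest 5%")

# Raw sample size going from 8 to 10 bits per channel.
print(f"{(10 - 8) / 8:.0%} more bits per sample")
```

Fewer steps across the same glow means wider visible bands, which is why pushing the contrast on an 8-bit image falls apart sooner than on a 16-bit render.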

Right, so 8bit is 256 shades per channel, 10bit is 1024. That's four times the range (yeah, should have realised that without working it out). Sounds like a fair improvement to me.

-

djchump wrote: They're SI, not marketing guff bollocktalk, so as such, very much are the standard measurements. nit is colloquial for the SI unit for luminance = cd.m^-2. lux is the SI unit for illuminance (i.e. luminous flux density/luminous exitance) = lumen.m^-2. Poynton p.604 and 607 for the defs.

Every day's a school day

Happily corrected, I'd never heard of nits before, except the things you get in your hair at infants -

AJ wrote: Right, so 8bit is 256 shades per channel, 10bit is 1024. That's four times the range (yeah, should have realised that without working it out). Sounds like a fair improvement to me.

And that's per channel, so when it's RGB it's 16,777,216 colours for 8bpc vs 1,073,741,824 colours for 10bpc.

Not that you'll be able to distinguish all that unless you're a tetrachromat or mantis shrimp. IMHO the extra range is mostly handy for getting rid of banding in the low blacks and blues in night scenes etc. -
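The arithmetic behind those numbers, as a small sketch (function names are just for illustration): shades per channel is 2 to the power of the bit depth, and the RGB total is that figure cubed.

```python
def shades_per_channel(bits: int) -> int:
    """Number of distinct levels one channel can encode at this bit depth."""
    return 2 ** bits

def total_colours(bits: int, channels: int = 3) -> int:
    """Every combination of levels across the channels (RGB by default)."""
    return shades_per_channel(bits) ** channels

for bits in (8, 10):
    print(f"{bits}bpc: {shades_per_channel(bits):,} shades per channel, "
          f"{total_colours(bits):,} colours")
```

So four times the shades per channel works out to 4 x 4 x 4 = 64 times as many RGB colours overall.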

cockbeard wrote: Every day's a school day. Happily corrected, I'd never heard of nits before, except the things you get in your hair at infants

Tbh I'd not heard of 'em either before all this - I checked up in Poynton as that's my TV and colour format bible. Kinda confusing with exit radiance being over solid angle of sphere (which is normally what you'll see quoted for point lights) whereas this is in a specific direction from a flat surface (like an area light).
